We are at the pediatrician’s office for an annual physical. The gowned offspring is holding my phone, reading a school-assigned book. The phone is also logged into a work meeting, which I’m listening to via AirPods. That same phone is also a hotspot for my laptop, which I’m using to write this. So there is a reason Apple is a trillion-dollar company, and I do hope they sort out their issues with China, lest we lose some of the magic.

Speaking of honeymoon periods and tech: Bing has become unusable, with slowdowns, plain unavailability, and the occasional gobbledygook. So — goodbye, Edge, it was nice while it lasted. Bard is faster, better-formatted, and available on Safari, to which I keep coming back.

As the holiday season approaches, let me note that a great use for LLMs, be it ChatGPT or Bard (and I’ve switched to Google’s lately), is for gift ideas. I know someone who likes DixIt and Mysterium, and it came up with Obscurio in seconds. It also pointed me to a good game for a tween who likes cats. For a six-year-old, too. And not a sponsored link in sight! Let’s see how long the honeymoon period lasts.

The proportion of genetics papers with autocorrect errors was estimated in 2020 to have reached 30 per cent. The Human Gene Name Consortium decided to rename the genes in question, wisely accepting that this would be easier than weaning researchers away from Excel.

At the intersection of science and technology lies the festering boil that is Microsoft Office.

My preferred m.o. on this blog is to write half-baked posts and never look back, but I left an important piece out of yesterday’s comment on The Techno-Optimist Manifesto, so there is now an update, along with a few corrections to spelling and style.

Technology as the last refuge of a scoundrel

Marc Andreessen, the billionaire venture capitalist, co-founder of Netscape, and occasional podcaster and blogger, wrote a bizarre post today titled The Techno-Optimist Manifesto, in which he first builds a straw man of the present day’s Luddite atmosphere using his best angsty adolescent voice (“We are being lied to… We are told to be angry, bitter, and resentful about technology… We are told to be miserable about the future…” all as separate paragraphs; you get the idea), then presents a series of increasingly ludicrous statements about our bright technological future that at the same time glorify the past, creating a Golden Age fallacy Möbius strip.

This is not Andreessen’s first act of incoherence: read or listen to last year’s Conversation with Tyler (Cowen) and his answer to Tyler’s question about the concrete advantages of Web 3.0 for podcasts (spoiler: he couldn’t name any). But that was an impromptu — if easily anticipated — question. Today’s Manifesto should be more baked, one would hope. But one would then be disappointed, as the entire article reads more like a cry for help than a well-reasoned essay. Here are some of the more flagrantly foul bullet points, with my comments below.

We believe that since human wants and needs are infinite, economic demand is infinite, and job growth can continue forever.

This is particularly salient for me after reading Burgis and Girard, and in short: no. Just no. Human desires are infinite, but not all desires are created equal. If your goal is to fulfill every human desire, you are not going to Hell with good intentions — you are intent on going to Hell.

We believe Artificial Intelligence can save lives – if we let it. Medicine, among many other fields, is in the stone age compared to what we can achieve with joined human and machine intelligence working on new cures. There are scores of common causes of death that can be fixed with AI, from car crashes to pandemics to wartime friendly fire.

We believe any deceleration of AI will cost lives. Deaths that were preventable by the AI that was prevented from existing is a form of murder.

A particularly pernicious pair of paragraphs that talks about AI as if it is currently able to save lives (it isn’t), and about people urging caution as if they are murderers (they aren’t). Doctors and biomedical researchers will be the first to welcome AI wholeheartedly into their professions, but that is mostly because too much of their professional time is spent fighting the bullshit that their technocratic overlords — say, IT companies funded by billionaire investors — have wrought upon them.

We believe that we are, have been, and will always be the masters of technology, not mastered by technology. Victim mentality is a curse in every domain of life, including in our relationship with technology – both unnecessary and self-defeating. We are not victims, we are conquerors (emphasis his).

The dichotomy is not master/victim, it is master/slave, and the only reason Andreessen would think that 21st-century humans are not slaves to technology is that he doesn’t get around much. We can agree that humans are not victims, but then again, no one is arguing that humans are committing crimes against technology.

We believe in nature, but we also believe in overcoming nature. We are not primitives, cowering in fear of the lightning bolt. We are the apex predator; the lightning works for us.

And yet we don’t go around randomly setting stuff on fire. The tribes whose members did that either got rid of those members or else got extinguished.

We believe in risk, in leaps into the unknown.

Good for you. I believe in managing risk and exploring the unknown before leaping into it.

We believe in radical competence.

All I see is radical stupidity. See: you can put “radical” in front of anything and it makes you seem profound!

We believe technology is liberatory. Liberatory of human potential. Liberatory of the human soul, the human spirit. Expanding what it can mean to be free, to be fulfilled, to be alive.

It is! I was at an airport a few days ago and saw several double below-the-knee amputees who a few decades ago would have had a miserable time but can now walk around like nobody’s business. However, technology making up the difference to something that was there before is one thing — creating something completely new is a different beast altogether. The probability space is vast and full of landmines, and a Manifesto which praises leaps into the unknown without mentioning a single externality is foolish at best, dangerous at worst.

↬Baldur Bjarnason, who likened the philosophy espoused to fascism. It made me think of Nationalism of the Serbian kind, and a saying from a (far from perfect) Serbian politician that, whenever he heard the word “patriotism”, he’d start looking for his wallet. Well, “technology” is the patriotism of Silicon Valley bros, and we’d better start paying attention to our wallets.

Update: Typos fixed and style cleared up. I also forgot to note one of Andreessen’s more heinous acts: naming the dead as Patron Saints of his disastrous cause. I am sure Nietzsche wouldn’t have minded (nihilism masquerading as materialism is right up his alley), but I am not sure how Feynman and Von Neumann would have felt, the former explicitly refusing to work on the hydrogen bomb. Edward Teller would have been a much better ideological fit (nuking Alaska seems to be right up the Techno-Optimists' alley), but then again I doubt they are self-aware enough to have the person who was the likely inspiration for Dr. Strangelove as the face of their party.

Surprised that some airlines still ask us to stow away “large electronic devices” but let us keep using iPhones and tablets in airplane mode. So it’s OK to use the 13 (sorry, 12.9”) iPad Pro, but not the 11” MacBook Air? Or even smaller Chromebooks? Someone hasn’t thought this through.

October lectures of note

The first one is tomorrow, and it’s a good one!

- The Ethics of Using Large Language Models by Nick Asendorf, PhD. Wednesday, October 4, 2023 at 12pm EDT.

- Clinical Center Grand Rounds: Pericles and the Plague of Athens by Philip A. Mackowiak, MD, MBA. Wednesday, October 11, 2023 at 12pm EDT.

- Understanding and Addressing Housing Instability for Cancer Survivors by Angela E. Usher, PhD, LCSW, OSW-C and Brenda Adjei, MPA, EdD (and no, I don’t know what most of those acronyms mean). Tuesday, October 17, 2023 at 2pm EDT.

- WALS lecture: The Lives of Bacteria Inside Insects by Nancy A. Moran, PhD. Wednesday, October 25, 2023 at 2pm EDT.

- Reading Remedy Books: Manuscripts and the Making of a National Medical Tradition by Melissa B. Reynolds, PhD. Thursday, November 2, 2023 at 2pm EDT. And yes, I know it’s in November, but I likely would have missed it for the next post since it is so early in the month.

Everything is hi-tech and no one is happy

Emily Fridenmaker, who is a pulmonary disease and critical care physician, writes on X:

Everything is so complex.

Logging into things is complex, placing orders is complex, figuring out who to page is complex, getting notes sent to other doctors is complex, insurance is complex, etc etc. But we just keep doing it.

At what point is all this just too much to ask?

There are a few more posts in that thread, and I encourage you to read all of it to get a sampling of why doctors feel burnt out. Whether you are in medicine, science, or education, your professional interactions have slowly — They Live-style — been replaced by a series of fragile Rube Goldberg machines that worked great in the minds of their technocratic developers, but break, stutter, stammer, and grind to a halt as soon as they encounter another one of their brethren. Which is all the time!

Too much of our professional lives has been spent playing around with a series of Rube Goldberg nesting dolls. Before reading I Am a Strange Loop I would have apologized for mixing metaphors, but this is how our brains think and it doesn’t have to make sense in the physical world to be useful, so apology rescinded. 2FA inside a 2FA, and if Apple is wondering why people are taking more and more time to replace their aging iPhones, I bet a good chunk of them dread doing it because they don’t even know how many different authenticating services, email clients, education portals, virtual machines (and all other needless detritus sold to management by professional salespeople) they would need to log back into.

Don’t get me wrong: Rube Goldberg machines are fun to play with (The Incredible Machine was one of my first gaming memories) and they can even be useful for individual workflows. But mandating that others use your string-and-pulley concoction that will break at the first unexpected interaction is sadistic. Just this Monday we had yet another AV failure at a weekly lecture held at a high-tech, newly opened campus. I knew there would be trouble the moment I saw that the only way to interact with any AV equipment was via a touchscreen that had no physical buttons and no way to remove the power cord, which was welded to the screen on one end and went into a closed cabinet on the other. Lo and behold, the trouble came not two weeks later: we couldn’t get past the screensaver logo. We ended up asking students to look at their own screens while guest lecturers were speaking (and nowadays everyone carries at least two screens with them to school), which was too bad, because I was looking forward to using the whiteboard, which is as far from Rube Goldberg as it gets.

Me from 20 years ago would have salivated for that much technology in my everyday life, but I’m hoping it was a function of the time, not of my age, and that kids-these-days know better. My own kids' experience with the great remote un-learning of 2020–2021 makes me hopeful that they will be more cautious about introducing technological complexity into their lives.

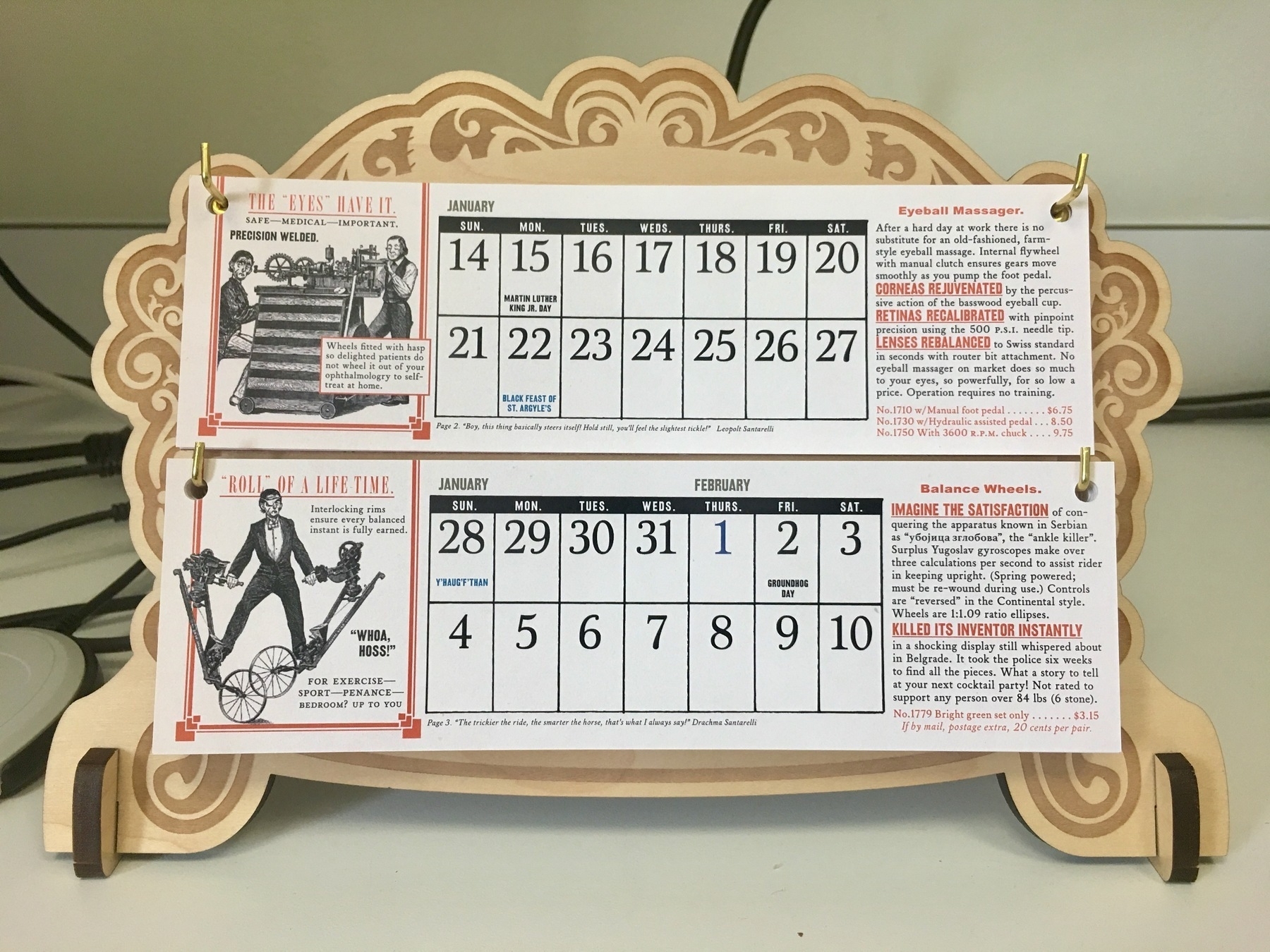

The Wondermark Calendar was great while it lasted. The 2018 edition had some strange ideas about what constituted a workout in Serbia.

The Wondermark Calendar for January 2018. Note the text on the bottom right.