🎙 A plug for the most recent ATP podcast special, After Apple. Yes, my frustration with the company has grown since reading about their dubious business practices, and I am typing this from Asahi Fedora, which my M1 Air can now dual-boot into. But mostly I am just fascinated by the speed with which the ATP trio took up my suggestion.

I did not and do not expect any of them to quit Apple any time soon, for the reasons they stated, but it sure made for a fun discussion. If I had one nit to pick, it was that they did not identify the company’s exposure to and reliance on China as a risk. The whole fragile supply chain that Tim Cook created under the mantra that inventory was evil could be gone in a gunboat flash.

📚 Finished reading: "Dreaming in Code" by Scott Rosenberg

Dreaming in Code is the story of the first three years in the life of the ultimately doomed Chandler, a project that started as a larger-than-life rethink of how computers handle information and ended up as an open-source desktop calendar client just as mobile and web apps were starting to take over the world. In that it was quite similar to the story of Vertex, which, admittedly, had a much better financial outcome for those involved.

Rosenberg managed to tick a lot of my personal interest boxes, from handling big projects through discussing the rise of David Allen’s Getting Things Done to talk of recursion and Douglas Hofstadter’s strange loops. He ends the book with a reminder of the very first Long Bet, made in 2002 between the man behind Chandler, Mitch Kapor, and the anti-humanist Raymond Kurzweil: that a machine would pass the Turing test by 2029. Apparently one did last year, four years before the deadline, though after reading Kapor’s rationale for betting against it one realizes he didn’t quite know what the test was actually about.

But I digress. Some of Chandler’s initial promise of universal notes and inherited properties lives on in Tinderbox, and it is no coincidence that I first learned about the book from its creator Mark Bernstein. Truly shared calendars and being able to edit a meeting that someone else created are no longer pipe dreams but table-stakes features of every calendar service. No one thinks too hard about syncing because the Internet is everywhere and everything is on the cloud. I shudder to think how many person-hours the developers of Chandler spent thinking about these, and for nothing.

By coincidence I am typing this from Barcelona, a 15-minute walk from the Basílica de la Sagrada Família, which began construction in 1882 and is expected to be completed this very year. It had to survive the Spanish Civil War and two World Wars, and at the end of it all it will be more of a tourist attraction than a place of worship, a European version of the Vegas Sphere. Such is the fate of grand ambitions.

A tale of two economy Substacks

Here is Sebastian Galiani on the concept of marginal revolution: ᔥTyler Cowen on his blog, Marginal Revolution

In the 1870s, almost simultaneously and largely independently, three economists overturned classical political economy. William Stanley Jevons, Carl Menger, and Léon Walras broke with the Ricardian tradition that explained value through labor, costs, or embedded substance. Value, they argued, does not come from the total amount of work put into something. It comes from the last unit—from what economists would soon call marginal valuation.

This was not a semantic tweak. It was a change in how economic reasoning itself works.

If those last four sentences triggered you it is for good reason, because there is more:

We see microeconomics arguments based on levels instead of changes, on identities instead of incentives, on stocks instead of flows. We see decisions justified by who someone is rather than by what happens at the margin. We see calls to preserve structures because they exist, to freeze allocations because change feels uncomfortable, to judge outcomes by averages rather than by trade-offs.

From a marginalist perspective, these arguments are not just wrong; they are incoherent.

And so on. To be clear, this is AI slop, plain and simple, and is identified as such in one of the top Marginal Revolution comments. It also has 38 likes, 7 reposts and 7 comments on Substack, none of which recognize that much of the text came from a Large Language Model.

Sebastian Galiani doesn’t have a Wikipedia page, but here is the first paragraph of his academic biography:

Sebastian Galiani is the Mancur Olson Professor of Economics at the University of Maryland. He obtained his PhD in Economics from the University of Oxford and works broadly in the field of Economics. He is a member of the Argentine National Academy of Economic Science, and Fellow of the NBER and BREAD. Sebastian was Secretary of Economic Policy, Deputy Minister, Ministry of Treasury, Argentina, between January of 2017 and June of 2018.

Lovely.

As a palate cleanser, here is an early 2000s article about inequality that the economist Branko Milanović recently re-published on his own Substack:

Many economists dismiss the relevance of inequality (if everybody’s income goes up, who cares if inequality is up too?), and argue that only poverty alleviation should matter. This note shows that we all do care about inequality, and to hold that we should be concerned with poverty solely and not with inequality is internally inconsistent.

That is only the first paragraph. The text is not easily summarized or excerpted, but it is a wonderful read with which I very much agreed and which only got better and more relevant as the years went by. Here are the first two paragraphs of Milanović’s Wikipedia page:

Branko Milanović (Serbian Cyrillic: Бранко Милановић, IPA: [brǎːŋko mǐlanoʋitɕ; milǎːn-]) is a Serbian-American economist and university professor. He is most known for his work on income distribution and inequality.

Since January 2014, he has been a research professor at the Graduate Center of the City University of New York and an affiliated senior scholar at the Luxembourg Income Study (LIS). He also teaches at the London School of Economics and the Barcelona Institute for International Studies. In 2019, he has been appointed the honorary Maddison Chair at the University of Groningen.

Milanović has 8 mentions on Marginal Revolution, including a 2015 post in which Cowen recognizes him as his favorite Serbian economist and blogger. But maybe he fell out of favor, as the last link from Cowen to anything of Milanović’s was back in 2023 (and the one before was in 2020, from Alex Tabarrok, who disagreed with what Milanović wrote about comparing wealth across time periods).

Galiani has 10 mentions, the most recent before this AI slop being his NBER working paper in which he and his co-authors measured efficiency and equity framing in economics research using, yes, LLMs. I actually don’t have a problem with such papers since the LLM use is clearly identified. But selling an LLM’s voice as your own is a different matter altogether and would deserve a posting to the academic Wall of Shame if there were one.

By the way, this is what students at the University of Maryland have to do per UMD guidelines (emphasis mine):

Students should consult with their instructors, teaching assistants, and mentors to clarify expectations regarding the use of GenAI tools in a given course. When permitted by the instructor, students should appropriately acknowledge and cite their use of GenAI applications. When conducting research-related activities (e.g., theses, comprehensive exams, dissertations), students should refer to the guidance below for research and scholarship. Allegations of unauthorized use of GenAI will be treated similarly to allegations of unauthorized assistance (cheating) or plagiarism and investigated by the Office of Student Conduct.

Everything on Substack should be marked as LLM-generated until proven otherwise, and increasingly anything Cowen links to as well.

Thursday follow-up, on sensemaking and productivity

Last month I linked to two things that are now worth following up on:

- John Nerst’s book “Competitive Sensemaking” is out. The only non-Amazon option is an ebook, so I will leave this one for the Daylight tablet.

- Steven Johnson’s NotebookLM project “Planet Of The Barbarians” is also live, accompanying the newsletter series of the same name. Even more interesting to me are the notebook and newsletter post titled “The Architecture of Ideas”, referencing Johnson’s work on tools and workflows for writing. Warning: both are full of rabbit holes.

And on the abandoning Apple front:

- Matt Gemmell has concerns about Apple much better baked than my own. He also has thoughts on detaching but seems less willing to give up on the ecosystem than I am. (ᔥJohn Brady)

- My own toe in the Apple-less pool is giving up on the essential Mac-only apps. OmniFocus was the first on the chopping block, replaced by Emacs org-mode, though instead of going through the now pretty dated tutorials behind that link I just asked Google Gemini how best to convert Kurosh Dini’s Creating Flow with OmniFocus into Org. And it worked! The idea is to keep replacing apps with open-source equivalents until making the switch becomes easy. It will probably take years, but you have to start somewhere.

Tuesday links, only positivity allowed

- Technology Connections on YouTube: You are being misled about renewable energy technology.

- Silje Grytli Tveten: Pretty soon, heat pumps will be able to store and distribute heat as needed

- Das Surma: Ditherpunk — The article I wish I had about monochrome image dithering

- Christopher Schwarz: Free Now & Forever: ‘Campaign Furniture’

- Doug Belshaw: The strange magic of the third week

OK, these two are included more for saliency than positivity, but they are also good!

- Michael Lopp: I Hate Fish. Because I am in the middle of a GTD identity crisis.

- Akash Bhat: Curate or die. Which is about this very post, and those like it.

Update: Adam Mastroianni’s latest post fits here like a glove.

Monday links, slopocalypse edition

- Benj Edwards for Ars Technica: New OpenAI tool renews fears that “AI slop” will overwhelm scientific research. The writing has been on the wall for at least a year, but the capitulation of the academic publishing complex is shockingly fast even taking into account its rotten core.

- Tyler Kingkade for NBC News: To avoid accusations of AI cheating, college students are turning to AI. The article is even worse than the headline: professors and teaching assistants are the ones turning to AI to tell them whether a student used AI in their work, and will not accept any evidence to the contrary. To repent, you need to take a class on writing with integrity and write an apology. Something tells me the younger generations will not be kind to LLMs.

- Cal Newport: The Dangers of “Vibe Reporting” About AI. Newport flags vague reporting which attempts to associate AI adoption with productivity gains that lead to job loss. In Enshittification, Cory Doctorow uncovers this maneuver for what it is: a ball under cup scam that relies on our not paying attention.

- Simon Willison: Moltbook is the most interesting place on the internet right now. It is interesting in the same way reading about major pileups is interesting. And maybe we can even learn something from them! But I’d rather they didn’t exist because exposure to the phrases in AI-generated text raises my blood pressure.

- Rohit Krishnan: Epicycles All The Way Down. I have become wary of all long texts on Substack, X, LinkedIn and the like, but I trust this one, about the way LLMs may or may not reason, to be largely written by a human, and the sections that aren’t are explicitly called out.

- Andrew J. Cowan for ASH Clinical News: Imagining the Ideal AI Partnership in Hematology. Good to see a fellow hematologist explicitly call out Doctorow’s reverse centaurs as something to be avoided at any cost. And yes, I have added the relevant book to the pile.

- On the off chance you understand Serbian, this week’s episode of Priključenija also fits the theme.

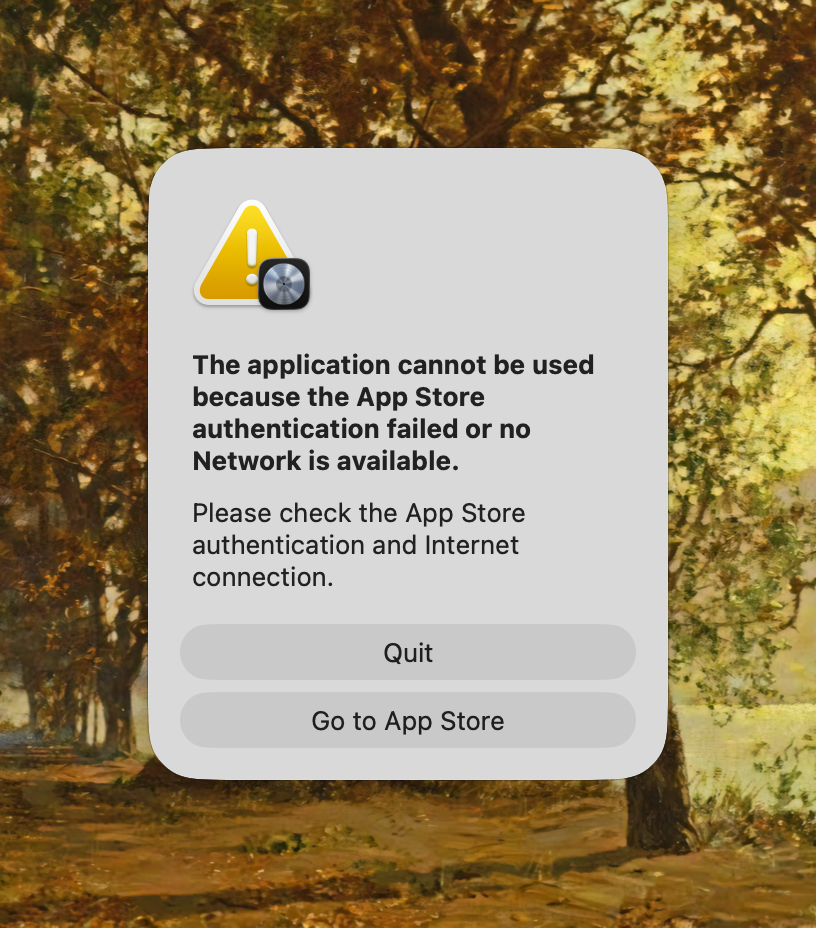

Today I learned that I paid $200 for audio editing software I can only use while online, which is tough to do when 37,000 feet up in the air. I don’t think this was an issue prior to the latest round of enshittification.

An update on my recent Internet browser use:

Zen → Orion → Vivaldi

Speed wins.

Wednesday links, in which we say goodbye to the last remnants of the 20th century

- Lily Lynch for The Ideas Letter: Cold War Fixations.

Book reviews make for great essays, particularly when the reviewer vehemently disagrees with the author’s main premise. The author here is Michael McFaul, a 1990s-style liberal democrat who, much like his neoliberal counterparts, can’t see that his project failed and therefore cannot even conceive of taking responsibility for that failure. Lynch takes him to task.

- Andrej Karpathy on X: A few random notes from claude coding quite a bit last few weeks.

Where the reliably sensible Karpathy provides an update on how he uses LLMs for programming and, well (ᔥTyler Cowen):

Slopacolypse. I am bracing for 2026 as the year of the slopacolypse across all of github, substack, arxiv, X/instagram, and generally all digital media. We’re also going to see a lot more AI hype productivity theater (is that even possible?), on the side of actual, real improvements.

Of course, I would have named it slopocalypse instead of slopacolypse but, you know, potato potatoe.

- Bogdan-Mihai Mosteanu: From Microsoft to Microslop to Linux: Why I Made the Switch.

Both Windows and macOS have become sufficiently sloppy that people are looking for an exit. This will be the decade of Linux, and it has already started with the Steam Deck, about which I haven’t written anything here but have discussed briefly in a podcast (Serbian only).

- Bryan Vartabedian: Three Adaptive Stances of Doctors.

Scenarios on how physicians may respond to recent developments, with a Focus, Fight, or Build phenotype. At a glance the Build phenotype may look like the “correct” one, but Vartabedian rightly points out that these people may soon enough become bullshit artists themselves. These are my words, not his. Dr Vartabedian was much more measured:

The problem I find is that a lot of builders aren’t in the trenches for long. They move into startups or administrative positions. And as they evolve, their view of medicine becomes fixed. And when you’re not struggling with the realities of an inbox, you begin to solve for a world that doesn’t exist.

This is something I also noticed, many years ago.

- @AutismCapital on X: Severus Snape - ALWAYS (LIVE at Hogwarts) 🔥🔥🔥

An LLM-generated music video for millennials (ᔥKevin Kelly) which is getting a lot of attention because, of course, the quick cuts and incoherence of Sora and others are perfect for the medium. This is why the widespread belief that MTV had shut down, when it actually hadn’t, was so salient: its former viewers are being made to think that everyone will soon enough be spinning their own music videos set to their own (kind of) music.

The many flavors of enshittification

There is a big winter storm threatening most of the continental US, and as any pair of overworked parents with an infant would do, my wife and I waited until a few days before snow piled on DC to get our other kids some new cold-weather boots.

We decided to shop in person rather than on Amazon because: 1) their feet are growing so fast that we have no idea what size boot they would be wearing for a particular model and 2) we had no time to try on a pair and return it if the fit was bad, because the snow was coming in, like, less than 24 hours. My wife, having kept some contact with brick-and-mortar stores, suggested we go to the closest large shoe-only stores despite there being a Macy’s, a Nordstrom and a few other general department stores within walking distance of us, because apparently those weren’t what they used to be. Okie dokie.

And so we took the Green Line to DC USA, a “multilevel enclosed urban shopping center anchored by big box stores”, most of which have closed since the mall’s heyday. But we both remembered there being a DSW just around the corner, and how could it possibly have gone out of business when its nearby competitor had gone bust shortly after we moved to DC? I think you can see where this is going: DSW too closed its DC locations for good early last year, so we “kissed the door” as they say.

But there was a Target in the same building, and a Marshalls, and even something that used to be called “Burlington Coat Factory”. Surely between the three we would find a decent selection of children’s winter boots in the middle of January. Not a chance, said my wife, who is wise and knowledgeable in the ways of shopping. (I write this without wanting to promote any stereotypes: we both in fact hate shopping, but it is all a matter of degrees and she hates it slightly less than I do.) I didn’t trust her, but I should have. I did go into the Target, remembering it as the Target of my youth (well, late 20s), when I had just come to America and its shelves, along with those of Walmart, seemed to stretch into infinity.

My friends, the kids’ shoe shelf of this particular Target did not stretch into infinity. It barely stretched six feet. It had no more than three sizes of each model and no more than two models of each shoe type. Marshalls was even worse. Under the wise direction of our master shopper we didn’t even walk into the Burlington.

Which is to say, before online services began enshittifying, they had already spurred the self-enshittification of the offline world. Cory Doctorow may have intended enshittification to signify the four-stage worsening of online services under the squeeze of financialization, but Doctorow himself, in the book and elsewhere, has welcomed broader use of the term, which is what I’m doing here; if you want to be pedantic, call it “self-shittification” instead. There is an economics/sociology/other “soft” science paper somewhere in describing the stages of offline enshittification. Put it together with online and you get a PhD in enshittology. Big department stores have been hollowed out, along with big bookstores, big electronics, big toys, big airlines, and anything else big that depended on volume rather than on personal relationships and brands. Like all bad things, this happened slowly, then suddenly: trying to outcompete the cloud businesses, they themselves raised a toe up to the cloud. Note how the de-enshittification of big box stores involves a more personal approach. Those who can pivot to it will survive. But being a cloud platform is like pregnancy: you either are or are not, and they were clearly not.

Effects of the cloud on everyday life are particularly salient now that I’ve read Technofeudalism (though I maintain that “cloudism” is the better term and will use it instead). This may be overstating the matter, but the broadest correct use of “enshittification” could be to describe the negative effects of cloud platforms becoming the dominant form of ownership: the thing that people need to have in order to become the movers and shakers of other people’s lives. For most of human history “the thing” was land. For the last few hundred years it was manufacturing capital. And now it’s this.

Each time, it made sense to sacrifice some of the base layer to build up and up. Yes, food was still important but even more important was the capital, so raze those fields of wheat and corn to erect factories. The famine will be worth it in the long run, for the survivors.

This is one big way in which cloud platforms have ruined meatspace. Another one is the digitization of what should be simple tasks, from AV equipment at a university to digital homes. Digishittification! This is different from what happened to big box stores, as it is not a self-inflicted response to cloudism but rather the effect of cloudists trying to impose themselves further onto meatspace and expand their domain to an ever-increasing number of objects and interactions. Heated seats are the horse armour of our new age, though even Bethesda didn’t have the gall to ask for recurring payments.

The third and personally most irksome form is the enshittification of our inner lives. Lifeshittification! I have spent the last hour recounting our family’s woes of shoe shopping instead of cooking brisket. My conversations increasingly revolve around bad things that are happening, the reasons why they are happening, and the ways to prevent them from happening. More often than not this means: Teams is junk; Microsoft is a junk company; try to get Microsoft out of your life even if it means changing your kids’ schools. Attention is now the commodity that land and capital used to be, and the cloud platforms have most of my attention. So is there even a way to win this fight?