Thursday links, AI and immigration

- Chris Arnade: What Holds America Together?

The best description of the conundrum America is in and the future of the American dream I have seen, accounting for the difference between “thin” (superficial) and “thick” culture. Good bit about immigration too:

Our tolerance for thin differences is also why immigration works better here than in other countries. That is especially true of front-row immigrants (highly educated), since they are leaving cultures they didn’t fit into at a thick level (entrepreneurial). They have self selected for being a natural American, at a thick level.

My own thoughts about and experience with immigration and the American dream match the above.

- Jessica Hullman: Living the metascience dream (or nightmare) with AI for science.

AI begets AI, as previously noted. Papers will be “safer”. Nuance will be lost. The comments to the post are just as enlightening.

- Paris Marx: Sam Altman’s anti-human worldview.

No nuance here either. Altman is so unabashedly anti-human that any of his public appearances is right out of a C.S. Lewis essay or story.

- Terry Godier: Current.

A new RSS reader ↬John Gruber which I am yet to check out. I do like the idea behind it, which tries to tame Dave Winer’s river of news a full two decades after he described the concept. I am less enthusiastic about the website copy: there is so much of it, and it is written in just such a way that it smells strongly of LLM assistance. I don’t think I mind it that much — though my skin still crawls when I see a “not this but that” phrase — and will chalk it up to the font-overload era of 1990s computing when we were just figuring out how to use the many typefaces available.

Monday links, science, technology and cults

- Rebecca Robbins and Gina Kolata for the NYT: Grail’s Cancer Detection Test Fails in Major Study. Some of my earliest tweets — now protected, so apologies for linking if you haven’t already followed me — were about Grail and why their project to screen healthy people for early signs of cancer would likely fail. Nine years and billions of dollars later…

- Peter Bonate: Drugs Explained. A layperson’s primer on drug development from a pharmacologist, available on GitHub.

- Colin Gorrie: How far back in time can you understand English?. One thousand years of the English language in one blog post.

- Joel Hawksley: How I built Timeframe, our family e-paper dashboard. It was quite the journey, interrupted by the Boulder County Marshall Fire which burned down more than a thousand buildings in late 2021. “On the night of December 31–January 1, heavy snowfall put an end to the fire.”

- ConchCat: I accidentally ate lunch with a cult. The cult in question is Twelve Tribes, accused of exploiting its followers as free labor, along with assorted abuses of women and children. But the sandwiches were good!

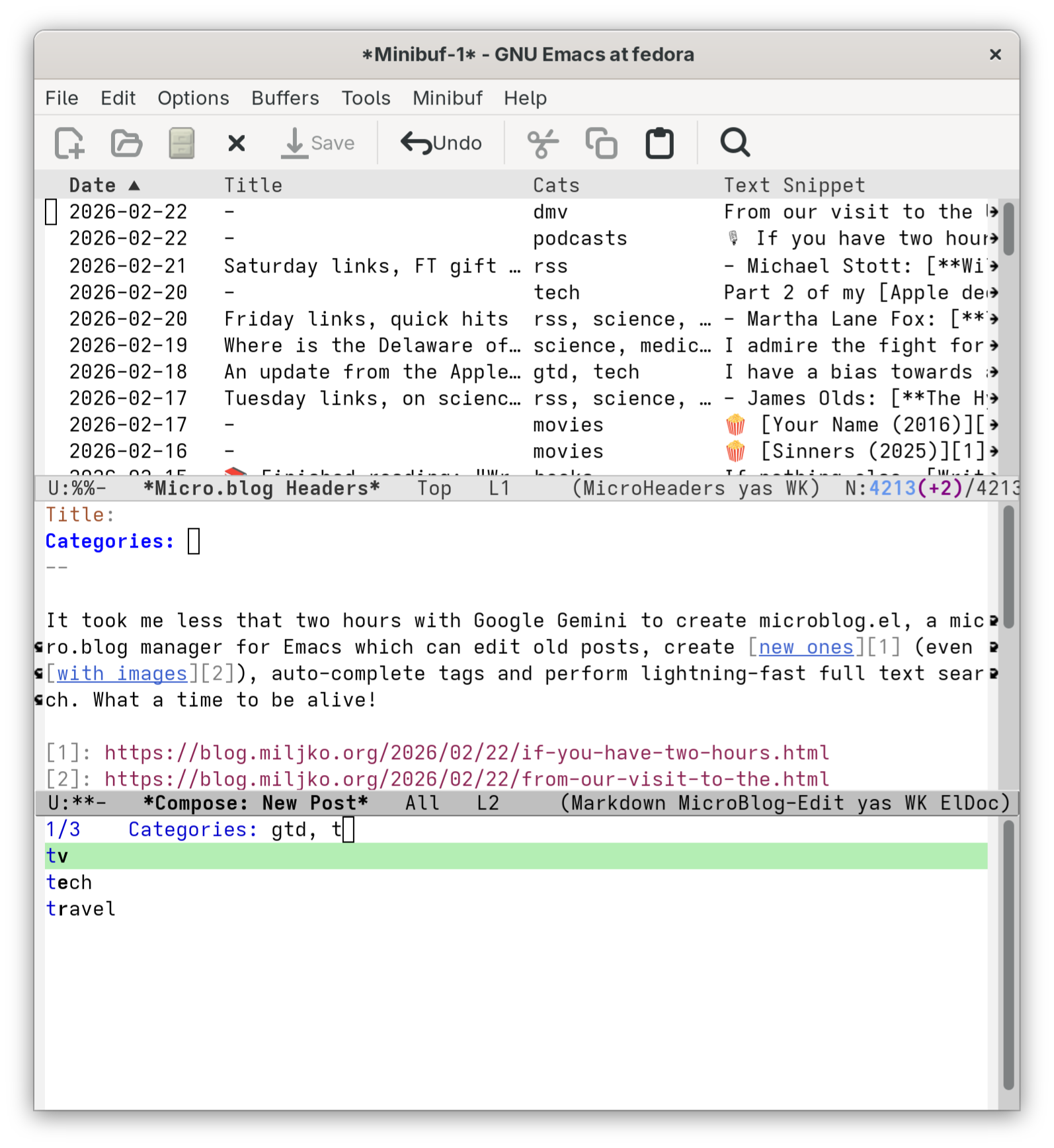

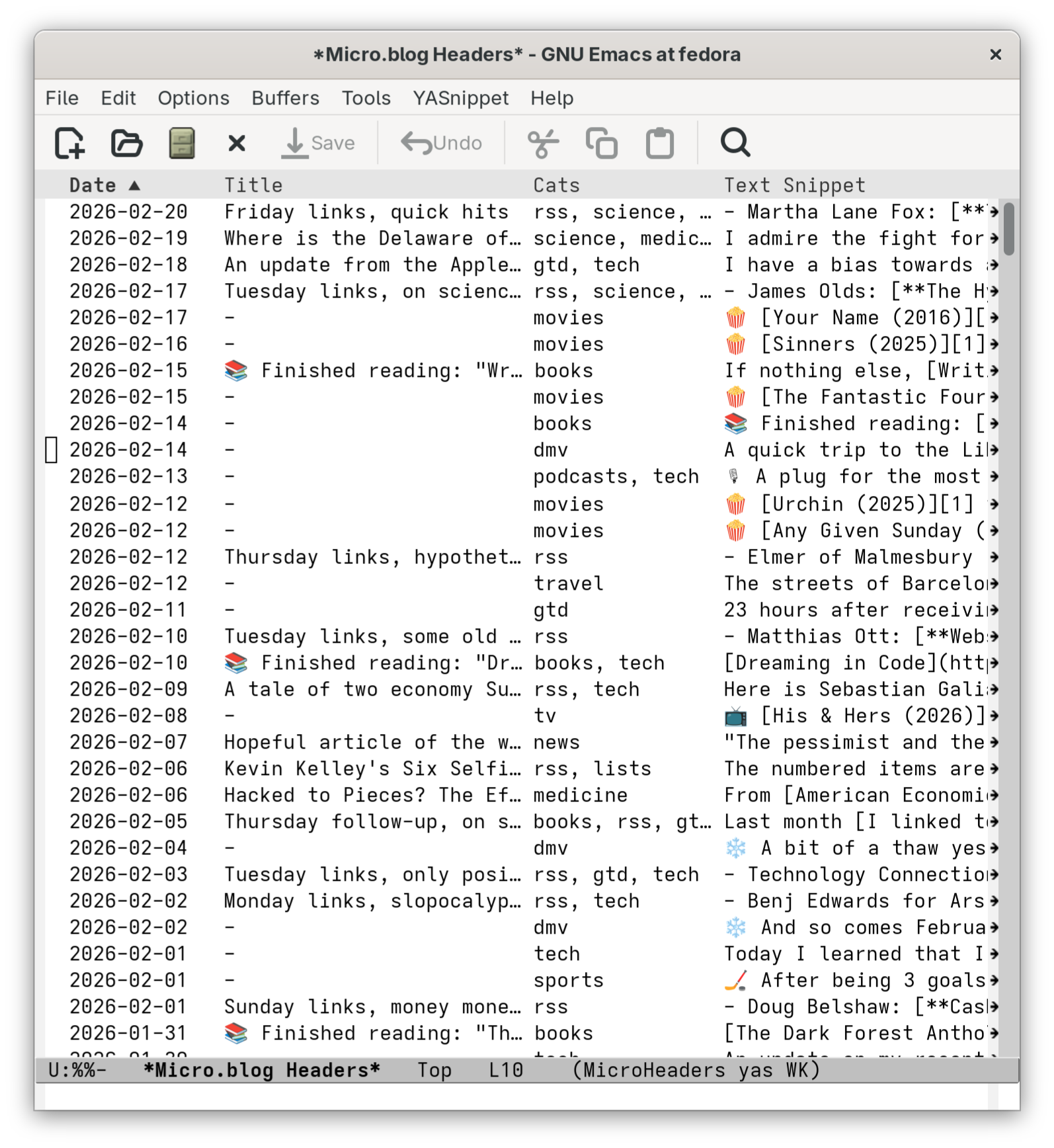

It took me less than two hours with Google Gemini to create microblog.el, a micro.blog manager for Emacs which can edit old posts, create new ones (even with images), auto-complete tags and perform lightning-fast full text search. What a time to be alive!

Part 2 of my Apple decoupling is not ready just yet, but I couldn’t wait to share this preview of my (and Google Gemini’s) micro.blog editing client. I am writing this in the browser as image attachment is not yet fully baked, but it can download and edit existing posts just fine.

Emacs FTW!

Friday links, quick hits

- Martha Lane Fox: The Price of initiative just collapsed. On the lag between invention and implementation, from printing press to AI.

- Scott Shambaugh: An AI Agent Published a Hit Piece on Me. A taste of what’s possible, with follow-up.

- Steve Blank: You Only Think They Work For You. About contracted service providers. The article is about public relations, but applies just as well to contract research organizations (CROs) and these complex dynamics are part of the reason clinical trials are so expensive.

- Andrew Gelman: The 80% power lie. A clear example of why frequentist statistics, so favored by conservative regulators, institutional review boards and scientific review committees, are more often than not based on false assumptions.

- Dr. Drang: Chinese New Year and Ramadan. The pseudonymous doctor often shifts between programming and scripting languages, from R through Python to Mathematica, which coincidentally matches my own amateur programmer journey. For this post he used Emacs Lisp, and I am very much interested in Emacs and Lisp right now due to recent experimentation with Linux. Saliency FTW.

An update from the Apple decoupling: OS

I have a bias towards action, so when an idea forms with a clear path forward and little if any downside I tend to go for it. Now, the plan to detach from Apple will take years to fully implement, but stage 1 is well under way: to find and use workable Linux parallels to every app I’ve come to learn and love over the years. Some of those I have already replaced (goodbye, OmniFocus, hello, Emacs org-mode) and some are still a work in progress (you won’t believe what will end up replacing MarsEdit), but before all that here are a few things about replacing the OS.

I was worried that I would get lost choosing between the various distributions of Linux, each with its own set of trade-offs, but having an M1 MacBook Air significantly limited my choices, which in this case was a good thing. Asahi is a project to bring Linux to Apple Silicon chips and so far M1 and M2 series are almost fully supported. And Asahi chose Fedora as its flagship distribution, so Fedora Asahi Remix was the obvious choice, though several other distributions have since become available on Apple Silicon thanks to Asahi.

Still, there were two more choices to make: what desktop environment (KDE Plasma or GNOME), and how to actually run the thing (via Parallels or actual dual-booting). It seems like the Asahi people would want me to choose KDE: it was the default choice during setup and they highlight it on the Fedora Asahi page. Alas, it just looked too much like Windows, and the configurability they touted as a feature also gave me pause: how much fiddling would I do as a procrastination mechanism? GNOME looked sort-of like MacOS but was clearly its own thing and dare I say was even more polished than Liquid Glass. So I picked GNOME.

As for the booting mechanism, Parallels or some other method of virtualization would 1) have been a cop-out, 2) still have me exposed to the disaster that is MacOS 26 Tahoe, and 3) not be representative of the actual experience once the M1 Air bites the dust and I have to find a new laptop. So I dual booted. Fedora Asahi makes this incredibly easy, with a single incantation at the Terminal shrine:

curl https://alx.sh | sh

This downloads the entire thing, partitions the drive, installs the new OS via a MacOS Recovery drive (don’t ask me how this works, but work it did) and changes the boot sequence to default to Linux. It would have been magic if not for the partitioning part, during which I found out that no, I do not actually have 300+ GB of free space on my 1 TB SSD, as MacOS doesn’t count the space used by temporary and cache files as occupied and would rather users don’t know about them at all.

Fortunately there is DaisyDisk, which was one of my first Mac App Store purchases back in 2012. Only the App Store version also doesn’t have access to these hidden files, because why would people know what is on the hardware they paid for, so I had to re-purchase the app from the developer’s website. On one hand no harm no foul (I’ve had and used the software for more than a decade), but on the other this kind of shenanigans is exactly why I’m skipping Appletown.
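For a quick sanity check before partitioning, the filesystem's own numbers can be read with a few lines of Python. This is only a minimal sketch: it relies on the standard library's `shutil.disk_usage`, the helper names `free_gb` and `enough_room` and the 300 GB target are made up for illustration, and the figure it prints may not match what Finder shows, for the reasons described above.

```python
import shutil

GB = 1000 ** 3  # decimal gigabytes, the unit disk vendors use


def free_gb(path="/"):
    """Return the free space reported for the filesystem holding `path`, in GB."""
    return shutil.disk_usage(path).free / GB


def enough_room(needed_gb, path="/"):
    """Return True if the filesystem reports at least `needed_gb` GB free."""
    return free_gb(path) >= needed_gb


if __name__ == "__main__":
    needed = 300  # hypothetical size of the planned Linux partition, in GB
    print(f"Free: {free_gb():.1f} GB, room for {needed} GB: {enough_room(needed)}")
```

It does not say where the space went, which is what a tool like DaisyDisk is for, but it does show whether the installer is likely to complain.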

So if I had to order how much time things took: partitioning was the longest and most tedious part owing completely to Apple’s opaqueness, writing this section comes after that, and the actual Fedora Asahi install was by far the quickest. The last time I dealt with Linux before this was when I installed Ubuntu Gutsy Gibbon back in 2008 to dual-boot with Windows XP or whatnot (I was never a Linux maximalist), and oh my how things have changed.

A few things you should know before you type the incantation in your own terminal: Thunderbolt is not supported, so no chance of a second display unless you find a Linux-compatible dock; Touch ID doesn’t work and I doubt that it ever will; sleep mode is not fully baked, so if your workflow involved leaving the laptop on for days relying on power management magic you should be prepared to switch back to turning the thing off at the end of a work day. Which, hey, may actually not be a bad thing if it makes you less tempted to just take a quick peek at the work email during a movie-watching night bio break, right?

The first one was almost a deal-breaker for me since I have grown attached to the LG 5K Ultrafine display, but everything else brought enough relief — even joy — for me to stick to the program. Now, for the Linux apps that brought joy back into my laptop use you will need to come back later this week, as this post has already gotten longer than planned. So it goes when you’re having fun.

Tuesday links, on science, medicine, technology and a bit of something extra

- James Olds: The Hypothesis Trap.

Why no scientist should hang their hat on a single pet theory, with real-world examples. The same problem haunts the world of biotech even as its denizens claim their superiority at drug development.

- Bryan Vartabedian: AI Isn’t Ready for Your Patients.

About a recent Nature Medicine article which found that LLMs were no better than Google at helping patients diagnose and manage their self-reported maladies. The reasons are those that I suggested two and a half years ago: ChatGPT can give you the correct answer from a properly structured clinical vignette, but the art and science of medicine lie in translating the reality in front of you (the patient’s haphazard story, their hodgepodge of medical records, the subtle physical exam findings) into something salient. Not saying AI won’t get there at some point, but it clearly still needs work.

- Venkatesh Rao: vgr: The Twitter Years (2007-22).

Rao has collected 101 (!?) of his best Twitter threads and a few hundred single tweets into a book. A note on the title page says:

This book is LLM-friendly. Point your LLM to venkateshrao.com/twitter-book if you want it to explore it. A full interactive archive, explorable via an AI oracle, is under development.

Living up to his call to be (slightly) monstrous.

- Kriston Capps and Marie Patino for Bloomberg: Inside the Plan to Demolish and Rebuild a Swath of Trump’s Washington.

Yes, it is a person I hate making a good point, which is that the brutalist architecture of L’Enfant Plaza is out of place so close to the National Mall and should be kept where it belongs. I even prefer the proposed neoclassicist style to what Trump’s ego would want, which I imagine to be a Dubai on the Potomac. (And even Dubai would be better than what’s in the President’s id.)

- Cabel Sasser: Wes Cook and The McDonald’s Mural. Sasser expands on his wonderful 2024 XOXO talk about a 10-year quest, which you should of course watch before reading.

- Luke Bouma for Cord Cutters: Babylon 5 Is Now Free to Watch On YouTube. Could it be true? Would the badly run and technologically incompetent Warner Bros. Discovery really commit this act of unprovoked altruism on the official Babylon 5 YouTube channel? Of course not: as of today the uploaded pilot is set as private.

🎙 A plug for the most recent ATP podcast special, After Apple. Yes, my frustration with the company has grown since reading about their dubious business practices and I am typing this from Fedora Asahi, to which my M1 Air can now dual-boot. But mostly I am just fascinated by the speed with which the ATP trio took up my suggestion.

I did not and do not expect any of them to quit Apple any time soon, and for reasons they stated, but it sure made for a fun discussion. If I had one nit to pick it was that they did not identify the company’s exposure to and reliance on China as a risk. The whole fragile supply chain that Tim Cook created under the mantra that inventory was evil could be gone in a gunboat flash.

📚 Finished reading: "Dreaming in Code" by Scott Rosenberg

Dreaming in Code is the story of the first three years in the life of the ultimately doomed Chandler, a project that started as a larger-than-life rethink of how computers handle information and ended up as an open-source desktop calendar client at a time when mobile and web apps started taking over the world. In that it was quite similar to the story of Vertex which, admittedly, had a much better financial outcome for those involved.

Rosenberg managed to tick a lot of my personal interest boxes, from handling big projects through discussing the rise of David Allen’s Getting Things Done to talks of recursion and Douglas Hofstadter’s strange loops. He ends the book with a reminder of the very first Long Bet, made in 2002 between the man behind Chandler, Mitch Kapor, and the anti-humanist Raymond Kurzweil, that a machine will pass the Turing test by 2029. It apparently did so last year, four years before the deadline, though after reading Kapor’s rationale for betting against, one realizes he didn’t quite know what the test was actually about.

But I digress. Some of Chandler’s initial promise of universal notes and inherited properties lives on in Tinderbox and it is no coincidence that I first learned about the book from its creator Mark Bernstein. Truly shared calendars and being able to edit a meeting that someone else created is no longer a pipe dream but a table-stakes feature of every calendar service. No one thinks too hard about syncing because the Internet is everywhere and everything is on the cloud. I shudder to think how many person-hours the developers of Chandler spent thinking about these, and for nothing.

By coincidence I am typing this from Barcelona, a 15-minute walk from Basílica de la Sagrada Família, which began construction in 1882 and is expected to be completed this very year. It had to survive the Spanish Civil War and two World Wars, and at the end of it all it will be more of a tourist attraction than a place of worship, a European version of the Vegas Sphere. Such is the fate of grand ambitions.

A tale of two economy Substacks

Here is Sebastian Galiani on the concept of marginal revolution: ᔥTyler Cowen on his blog, Marginal Revolution

In the 1870s, almost simultaneously and largely independently, three economists overturned classical political economy. William Stanley Jevons, Carl Menger, and Léon Walras broke with the Ricardian tradition that explained value through labor, costs, or embedded substance. Value, they argued, does not come from the total amount of work put into something. It comes from the last unit—from what economists would soon call marginal valuation.

This was not a semantic tweak. It was a change in how economic reasoning itself works.

If those last four sentences triggered you it is for good reason, because there is more:

We see microeconomics arguments based on levels instead of changes, on identities instead of incentives, on stocks instead of flows. We see decisions justified by who someone is rather than by what happens at the margin. We see calls to preserve structures because they exist, to freeze allocations because change feels uncomfortable, to judge outcomes by averages rather than by trade-offs.

From a marginalist perspective, these arguments are not just wrong; they are incoherent.

And so on. To be clear, this is AI slop plain and simple and is identified as such in one of the top Marginal Revolution comments. It also has 38 likes, 7 reposts and 7 comments on Substack, none of which recognize that much of the text came from a Large Language Model.

Sebastian Galiani doesn’t have a Wikipedia page, but here is the first paragraph of his academic biography:

Sebastian Galiani is the Mancur Olson Professor of Economics at the University of Maryland. He obtained his PhD in Economics from the University of Oxford and works broadly in the field of Economics. He is a member of the Argentine National Academy of Economic Science, and Fellow of the NBER and BREAD. Sebastian was Secretary of Economic Policy, Deputy Minister, Ministry of Treasury, Argentina, between January of 2017 and June of 2018.

Lovely.

As a palate cleanser, here is an early 2000s article about inequality that the economist Branko Milanović recently re-published on his own Substack:

Many economists dismiss the relevance of inequality (if everybody’s income goes up, who cares if inequality is up too?), and argue that only poverty alleviation should matter. This note shows that we all do care about inequality, and to hold that we should be concerned with poverty solely and not with inequality is internally inconsistent.

That is only the first paragraph. The text is not easily summarized or excerpted but it is a wonderful read with which I very much agreed and which only got better and more relevant as years went by. Here are the first two paragraphs of Milanović’s Wikipedia page:

Branko Milanović (Serbian Cyrillic: Бранко Милановић, IPA: [brǎːŋko mǐlanoʋitɕ; milǎːn-]) is a Serbian-American economist and university professor. He is most known for his work on income distribution and inequality.

Since January 2014, he has been a research professor at the Graduate Center of the City University of New York and an affiliated senior scholar at the Luxembourg Income Study (LIS). He also teaches at the London School of Economics and the Barcelona Institute for International Studies. In 2019, he has been appointed the honorary Maddison Chair at the University of Groningen.

Milanović has 8 mentions on Marginal Revolution, including a 2015 post in which Cowen recognizes him as his favorite Serbian economist and blogger. But maybe he fell out of favor, as the last link from Cowen to anything of Milanović’s was back in 2023 (and the one before was in 2020, from Alex Tabarrok, who disagreed with what Milanović wrote about comparing wealth across time periods).

Galiani has 10 mentions, the most recent before this AI slop being his NBER working paper in which he and his co-authors measured efficiency and equity framing in economics research using, yes, LLMs. I actually don’t have a problem with such papers since the LLM use is clearly identified. But selling an LLM’s voice as your own is a different matter altogether and would deserve a posting to the academic Wall of Shame if there was one.

By the way, this is what students at the University of Maryland have to do per UMD guidelines (emphasis mine):

Students should consult with their instructors, teaching assistants, and mentors to clarify expectations regarding the use of GenAI tools in a given course. When permitted by the instructor, students should appropriately acknowledge and cite their use of GenAI applications. When conducting research-related activities (e.g., theses, comprehensive exams, dissertations), students should refer to the guidance below for research and scholarship. Allegations of unauthorized use of GenAI will be treated similarly to allegations of unauthorized assistance (cheating) or plagiarism and investigated by the Office of Student Conduct.

Everything on Substack should be marked as LLM-generated until proven otherwise, and increasingly anything Cowen links to as well.