An interesting entry to the big and ever-growing book of unintended consequences:

Chernobyl caused many more deaths by reducing nuclear power plant construction and increasing air pollution than by its direct effects which were small albeit not negligible.

Andrew Gelman has a new — and free — textbook out, Regression and Other Stories. From the cover:

Many textbooks on regression focus on theory and the simplest of examples. Real statistical problems, however, are complex and subtle. This is not a book about the theory of regression. It is a book about how to use regression to solve real problems of comparison, estimation, prediction, and causal inference. It focuses on practical issues such as sample size and missing data and a wide range of goals and techniques. It jumps right in to methods and computer code you can use fresh out of the box.

Between that, his Bayesian Data Analysis and many other freely available lectures and books, has there ever been a better time for high school students bored out of their minds by the pedestrian curriculum? But I am now just projecting to myself from 20-some years ago — I am sure high school students of today would rather spend time on their PS5, and my past self would probably have joined them. (↬Andrew Gelman)

Good quote today from Adam Mastroianni’s latest newsletter:

When I see someone salivating over the idea of a Science Gestapo, I have to marvel at their faith that authorities only ever prosecute guilty people.

Applies more broadly than science, of course.

Why are clinical trials expensive?

Why haven’t biologists cured cancer? asks Ruxandra Teslo in my new-favorite Substack newsletter, and answers with a lengthy analysis of biology, medicine and mathematics. Clinical trial costs inevitably come up, and I know it is a minor point in an otherwise well-reasoned argument but this paragraph stood out as wrong:

Clinical trials, the main avenue through which we can get results on whether drugs work in humans, are getting more expensive. The culprits are so numerous and so scattered across the medical world, that it’s hard to nominate just one: everything from HIPAA rules to Institutional Review Boards (IRBs) contribute to making the clinical trial machine a long and arduous slog.

What happened here is classic question substitution: swapping a hard question (Why are clinical trials getting more and more expensive?) for an easy one (What is the most annoying issue with clinical trials?). Yes, trials involve red tape, but IRB costs pale in comparison to other payments. Ditto for costs of privacy protection.

If we are picking out likely reasons, I would single out domain-specific inflation fueled by easy zero-interest money flowing from whichever financial direction into the biotech and pharmaceutical industries, leading to many well-coined sponsors competing for a limited — and shrinking! — pool of qualified sites and investigators. It is a pure supply-and-demand mechanic at heart which is, yes, made worse by a high regulatory burden, but that burden does not directly lead to more expensive trials.

There are some indirect effects of too much regulation, and at the very least it may have pushed more investigators to quit their jobs, decreasing supply. It has also contributed to regulatory capture: part of the reason why industry has been overtaking academia for the better part of this century is that it’s better at dealing with bureaucracy. But again, these costs pale in comparison to direct clinical trial costs.

Another nit I could pick is the author’s very limited view of epigenetics: if more people read C.H. Waddington maybe we could find a better mathematical model to interrogate gene regulatory networks, which are a much more important part of the epigenetic landscape than the reductionists' methylation and the like. But I’d better stop before I get too esoteric.

If there had been a webpage monitoring the progress of the actual moonshot in the 1960s, it would have said stuff like “we built a rocket” and “we figured out how to get the landing module back to the ship.” In 1969, it would have just said, “hello, we landed on the moon.” It would not have said, “we are working to establish the evidence base on multilevel interventions to increase the rates of moon landings.”

This is Adam Mastroianni skewering the “science moonshot” initiatives, and rightfully so. If all we have to show for them are 2,000 papers full of mealy-mouthed prose, it was a ground shot at best.

Failure of imagination, drug cost edition

Here are a few facts we should all be able to agree on:

- The US has the highest drug prices in the world

- The US has the highest clinical trial costs in the world

- American drug R&D is so developed that it subsidizes the rest of the world

The disagreement lies in how these three are connected. If I interpret the Marginal Revolution school of thought correctly, drug prices are high (1) because America subsidizes all R&D (3) while having the highest clinical trial costs (2). So, 3 + 2 → 1 and limiting 1 to make drugs more affordable will lead to a negative feedback loop which would limit 3. This is why people who want to regulate down drug prices are “Supervillains” responsible for future deaths of millions of people who won’t be able to benefit from the never-developed drugs.

This implies that most drugs approved in the US are (1) life-saving and (2) have no other alternative. My gut reaction, backed by no direct research but some insight into cancer drug effects and mechanisms of action, is that this is not the case and that if someone were to perform a rigorous review of approved drugs they would see that most are me-too drugs with marginal benefit. That is not, however, the argument I’m trying to make here, which is why it is relegated to the margin.

But of course, if 3 + 2 → 1 were true, there are two more ways to lower 1: limiting the scope of R&D (3), which wouldn’t be the first time, or, preferably, lowering clinical trial costs (2), which have ballooned out of all proportion thanks to a potent mix of regulatory burden and oodles of money floating around the pharmaceutical/biotech space. The reason for all that money flying around? The promise of high payout guaranteed by unregulated drug prices! So: 1 + 3 → 2.

To expand on this, on the margin for now and in a separate post later: pre-clinical and phase 1/2 startups get billion-dollar valuations based on but a dream of success, which gets them hundreds of millions of dollars in their accounts, which in turn gets them to spend like drunken sailors on what should be low-single-digit million-dollar trials, which gets you to a >$10M phase 1 trial, which borders on clinical trial malpractice.

And if both of those relationships are true, well, then there is a positive feedback loop in play, also known as a vicious cycle, and if there is one word that encapsulates the American drug cost landscape, “vicious” is better than most.

The problem with high clinical trial costs isn’t only that they serve as an excuse for/lead to high drug prices. They also pose an impossibly high barrier for disconfirmatory trials that could get hastily approved but ultimately ineffective drugs out of the market (see Ending Medical Reversal). Because even in the world of Marginal Revolution’s hyper-accelerated approvals and early access to all, patients and physicians alike would need formal measures of safety and efficacy as a guide, and clinical trials are the ultimate way to do it. So we’d better have a quick-and-dirty way to do those too.

Unless the failure of imagination is mine, and in the Marginal Revolution world it would be an artificial intelligence sifting through “real-world” data for safety and efficacy of the thousands of new medical compounds and procedures blooming in this unregulated Utopia, perhaps even recommended and/or administered by LLMs who would finally bypass those pesky rent-seeking doctors. You have to see item number 6 to believe it. I did a double-take. Then again, maybe I shouldn’t take economists so seriously.

The Junk Charts blog sometimes links to charts that are not junk at all, today being one of those times. The link is to some beautiful storytelling on the many neighborhoods of New York City and now I wish the Washington Post had something similar for DC.

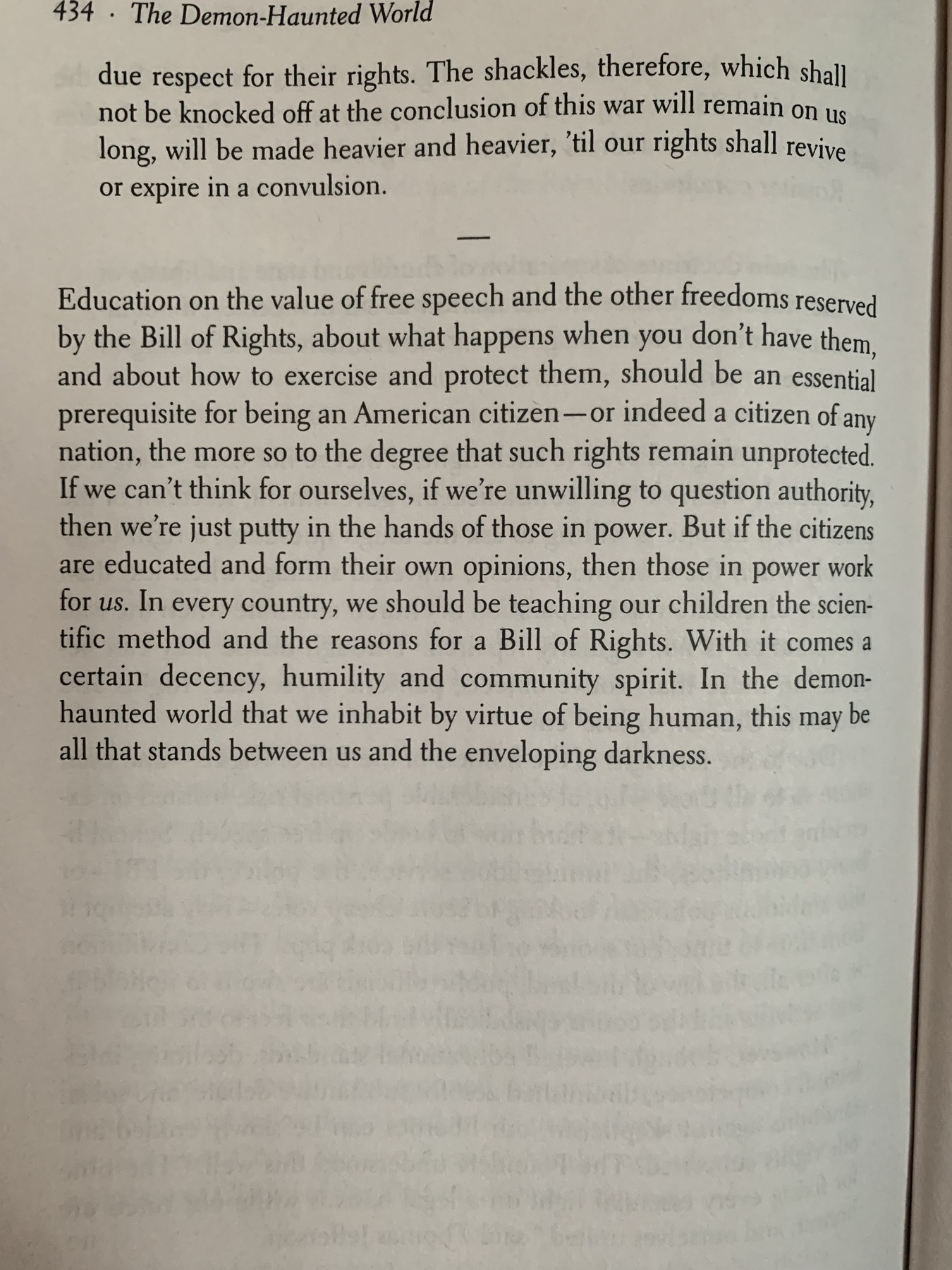

This page from Carl Sagan’s The Demon-Haunted World was common sense when it first came out almost 30 years ago. Today it reads like a revolutionary screed.

Some questions about biotech that Alex Telford finds interesting. The last one was my favorite.

This is from Alex’s blog. He also has a newsletter which doesn’t overlap, so best to follow both.

From the Antidotes to cynicism creep in academia:

Let me reiterate what I said before: when someone sends me a paper or a newspaper article about a paper, my view today is that the conclusions may or may not be valid. I don’t expect things to hold up just because this is a scientific paper (compared to a blog post), or because it is peer-reviewed (compared to a preprint), or because it is published in Nature or Science, or because it is published by a famous scientist. I think my view is reasonable and supported by evidence, at least in the fields I work in.

This is also my view, and it applies as much to The New England Journal of Medicine, The Lancet and JAMA as it does to Nature or Science. They are for-profit businesses that will do things for profit, not for the elevation of science. The article goes on to list the titular antidotes from the position of a tenured professor of psychology working in Europe. My own antidote to cynicism creep in academia was slightly different.