The first 24 hours with my new toy

Or should I say “our new toy”: as I’m writing this, the tween is poking and swiping her way through visionOS like she’s been doing it her whole life, while I am on the laptop. Not that I mind, since the only way to post to micro.blog on day 1 of Apple Vision Pro is through the online interface. It looks like none of my preferred writing tools (iA Writer, Ulysses, or even the micro.blog app itself) have even checked the box to allow unmodified porting of their iPad app, let alone made a native one.

The list of essential-to-me software that Apple Vision Pro doesn’t yet have is long: OmniFocus and Asana for task management, NetNewsWire and Reeder for RSS feeds, WhatsApp for keeping in touch with family in Europe. I am not a watches-videos-on-the-tablet-by-himself type of person, so missing Netflix and YouTube apps was not a big deal even though people seem to have made much of it. Having the almost-complete Microsoft Office 365 suite natively was a pleasant surprise, even though Word kept crashing and Teams kept defaulting to the useless Activity tab.

Note that I am taking the hardware tradeoffs and the “spatial computing” working environment for granted. This alone is a huge accomplishment: yes, yes, I can get from a grainy image of my apartment to the top of Haleakalā with a twist of a knob, now let me do stuff. And the doing of the stuff will be essential in dingy hotel rooms during business trips — of which there will be many this year — so I may as well start figuring out how to make the best of it. But until then, it is a toy.

So with that in mind, here is a list of first impressions:

- The dual loop band felt more comfortable on my head than the cooler-looking solo band, mostly because it stopped the entirety of the headset from resting on my nose.

- Setting up the screens was much less finicky than I thought it would be, and slipping them off and on quickly to, let’s say, use Face ID on the phone was seamless.

- No eye strain that I’ve noticed, but I haven’t used the toy for longer than 30–45 minutes at a time. I also don’t wear glasses and knock-on-wood never had problems with eyesight.

- The pass-through video is a marvel in that it didn’t cause any motion sickness, but it is so grainy and the motion blur from any head movement so obvious that I never felt like I was looking through glass, even the thick glass of fogged-up ski goggles.

- To walk back what I wrote above: pass-through video shines when it is the backdrop for the crisp app windows that can feel the walls around them and position themselves accordingly. In those moments, when your surroundings are in the peripheral vision, it does feel like those windows are actually floating in your actual room.

- But that doesn’t matter when the most common use case will be, I suspect, completely obliterating your real environment and doing work in a near-photorealistic 3D rendering of a national park. Or watching equally breathtaking immersive 3D scenes from Apple TV’s latest documentary.

- “Immersive 3D” is distinct from 3D movies, more like actually being there than looking at a diorama through a rectangular screen. I suspect it will change filmmaking forever, but then people have said that Segway would change the world so what do I know?

- Personas are as uncanny as you can imagine. If you ever wondered what you would look like as an NPC in GTA or a player in NBA2K, well, for less than four thousand dollars you can find out yourself.

- People who knew that I had AVP when I called on FaceTime acted grossed out; those who didn’t ranged from slightly confused (a new haircut? a filter?) to unfazed (oh, you’re using an avatar… whatever for?).

- At the bottom of the uncanny valley the only way to go is up. Which is scary and deserves a post or two of its own.

- It’s the way of the future, for better or worse.

So if in 2007 everyone used their digital camera to take photos of the original iPhone, and in 2024 everyone used their iPhone to take photos of Apple Vision Pro, am I to infer that in 2040 we will all be taking photos with our headsets? Probably just naive empiricism, but I had to ask.

Nilay Patel at The Verge gave Apple Vision Pro a 7, the same score Meta Quest 3 got from David Pierce just 3 months earlier. And if you read their scoring guidelines it makes sense: a 7 is “very good; a solid product with some flaws”, with 10 being “the best of the best”. But then of course a 7 is the best headsets can be right now, given the tech’s limitations, right?

Well, no. Oculus Quest 2 scored an 8 — “Excellent. A superb product with minor or very few flaws.” — so now I am confused. Why give out numeric scores at all if you will be so slapdash about it?

The Iconfactory’s Project Tapestry is interesting and pretty, but feels like reinventing the wheel and throws RSS under the bus (emphasis mine):

Blogs, microblogs, social networks, weather alerts, webcomics, earthquake warnings, photos, RSS feeds - it’s all out there in a million different places, and you’ve gotta cycle through countless different apps and websites to keep up.

What in the world are they on about? RSS feeds do collate all of this. How is what they want to do any better than textcasting? I can see how it’s worse — it would be view-only, without posting and editing.

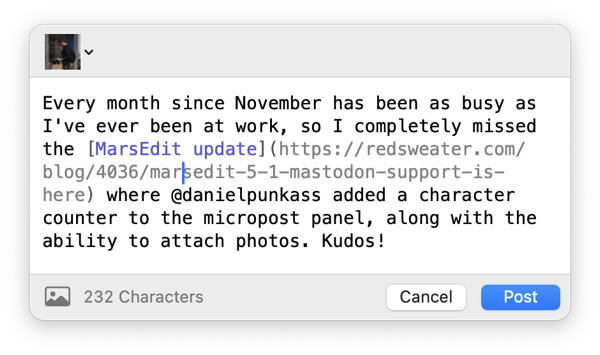

Every month since November has been as busy as I’ve ever been at work, so I completely missed the MarsEdit update where @danielpunkass added a character counter to the micropost panel, along with the ability to attach photos. Kudos!

Here are two products that work wonders for reducing travel anxiety:

- Anker Magnetic Battery, for when you want to charge your phone

- Anker 45W Wall Charger, for when you want to charge everything else

Both are small and affordable, especially if you set a price alert.

Bullet bit, and Kagi is now my default search engine. An unexpected benefit was their LLM, which gave good answers to a standard set of questions. Between Apple coming out with a new platform, services popping up left and right, and a blog resurgence, it’s like the mid-2000s without the financial crisis.

Apple's App Store is not a walled garden, it's a dumpy casino

I have been reading with interest about the Epic versus Apple in-app payment saga, but have no respect for either of the parties. Epic, because they pretend to care about the developer ecosystem when all they want is to be the gatekeeper instead of the gatekeeper. Apple, because they claim higher ground (the words “Apple” and “higher ground” in the same sentence of course bring to mind Marco Arment’s essay, but the ground Apple lost 9 years ago has since been reclaimed), wanting to keep their walled garden pristine and free of bad actors, while the garden is in fact overgrown with weeds. Where the rose bushes used to be there are now squatter tents pitched, mangy dogs guarding the perimeter. The magnolias are on fire. Somewhere in the distance a mother cries for her lost child.

You see, our four-year-old has developed an interest in sea animals. Sharks in particular, but any saltwater organism will do. To nurture that interest, I scoured the iPad App Store for anything that a) features the ocean and b) is in the 4+ age category. I should have known better than to trust Apple’s own search, because the results were a disaster: “games” that let you play for all of 30 seconds before serving you a noisy ad that can only be closed by tapping repeatedly on an 8-point sized barely visible white “x” even after the mandatory 1-minute lockout period when all you can do is watch low-resolution mock-ups of what may or may not be the game that you will get if you are successfully tricked into downloading whatever they are selling; or “edutainment” products that pop up offers for $99.99 in-app purchases after each tap; or once-reputable game developers who deck out their 4+-approved shark simulators with so many bells, whistles, and requests to buy their in-game currency that you may as well have sent your child to an Atlantic City casino. And not one of the good ones.

In what universe, then, is Apple’s repeated shakedown of Hey not simply evil? Why is the company protecting its adult users from the horrors of an app that is unusable without an externally created account, but is just fine with four-year-olds being bombarded with advertisements and offers for double-digit in-app purchases of worthless game credits? Having even half-way decent curation and a usable search screen would be ideal. Any one of those without the other would also be acceptable. As of January 2024, the iPad/iPhone App Store has neither, and it is a disaster. And Apple has the gall to charge 15–30% for the privilege of being listed in that pigsty.

If Apple’s other App Stores (Mac and Apple TV) are not like that, it is only because they are not worth the scammers’ attention. In less than 24 hours I will log into a different Apple store and pay almost four thousand dollars, about a thousand more than my first ever monthly paycheck as a medical resident a dozen or so years ago, for the privilege of playing with their new doohickey. I can only hope that Vision Pro does not become too popular: a 360° full-immersion experience of the iPhone/iPad App Store would not be pretty, though lovers of gross-out horror may be appreciative.

The Washington Post has your weekend reading covered: “He spent his life building a $1 million stereo. The real cost was unfathomable”.

The faded photos tell the story of how the Fritz family helped him turn the living room of their modest split-level ranch on Hybla Road in Richmond’s North Chesterfield neighborhood into something of a concert hall — an environment precisely engineered for the one-of-a-kind acoustic majesty he craved. In one snapshot, his three daughters hold up new siding for their expanding home. In another, his two boys pose next to the massive speaker shells. There’s the man of the house himself, a compact guy with slicked-back hair and a thin goatee, on the floor making adjustments to the system. He later estimated he spent $1 million on his mission, a number that did not begin to reflect the wear and tear on the household, the hidden costs of his children’s unpaid labor.

So it goes…

Middle school smart phones

There is a big change in how Generation Z and whatever follows deal with technology, and of course it is parent-driven. Our eldest is a 6th-grader and we are among the youngest, if not the youngest, parents in her class. We were both in our late 20s when she was born, which was considered geriatric in Serbia but is practically a teenage pregnancy by DC standards. While most of her classmates have smartphones, she is not getting one until she has a driver’s license (whenever that happens) and will have to live the hard-knock life of Apple Watch at school and the iPad at home.

To our older friends this is nearly child abuse. However will they develop socially, they ask, as if TikTok were a social network and grammatically incorrect emoji-laden texts the only means of communication. Won’t they miss out on important interactions… on their way from home to school and back, I guess? Friends our age and younger don’t yet have middle schoolers, but most agree with our stance. Dumb phones and/or smart watches are good enough for safety. Roblox et al on a tablet can replace the hour-long conversations over landline phones of generations past. So what need exactly does an iPhone fill, other than assuaging the fear of missing out?

Not everyone in a generation is the same, of course, but there are overall tones. In keeping with the economic hardship, stunted maturation, and the general pessimism of the millennials, I predict our tone to be “not so fast, young’uns”. By the time our youngest is in 6th grade, seven years from now, the smartphone tweens should be in the minority.